How To Participate

-

Create an account on CodaLab and register for the shared task in the “Participate” tab.

-

Join the task's Google group or Slack group. If you have questions, reach out to the task organizers, who will respond as quickly as possible. Also, follow us on Twitter (@AfriSenti2023) for the latest updates.

-

Participants can form a team of several people (and give it a name) or participate as a single-person team. A team can only make submissions from its team CodaLab account, so each team must use exactly one CodaLab account.

-

A participant can be a member of only one team. If there are reasons why it makes sense for you to be on more than one team, email us before the evaluation period begins; this may be allowed in special circumstances.

-

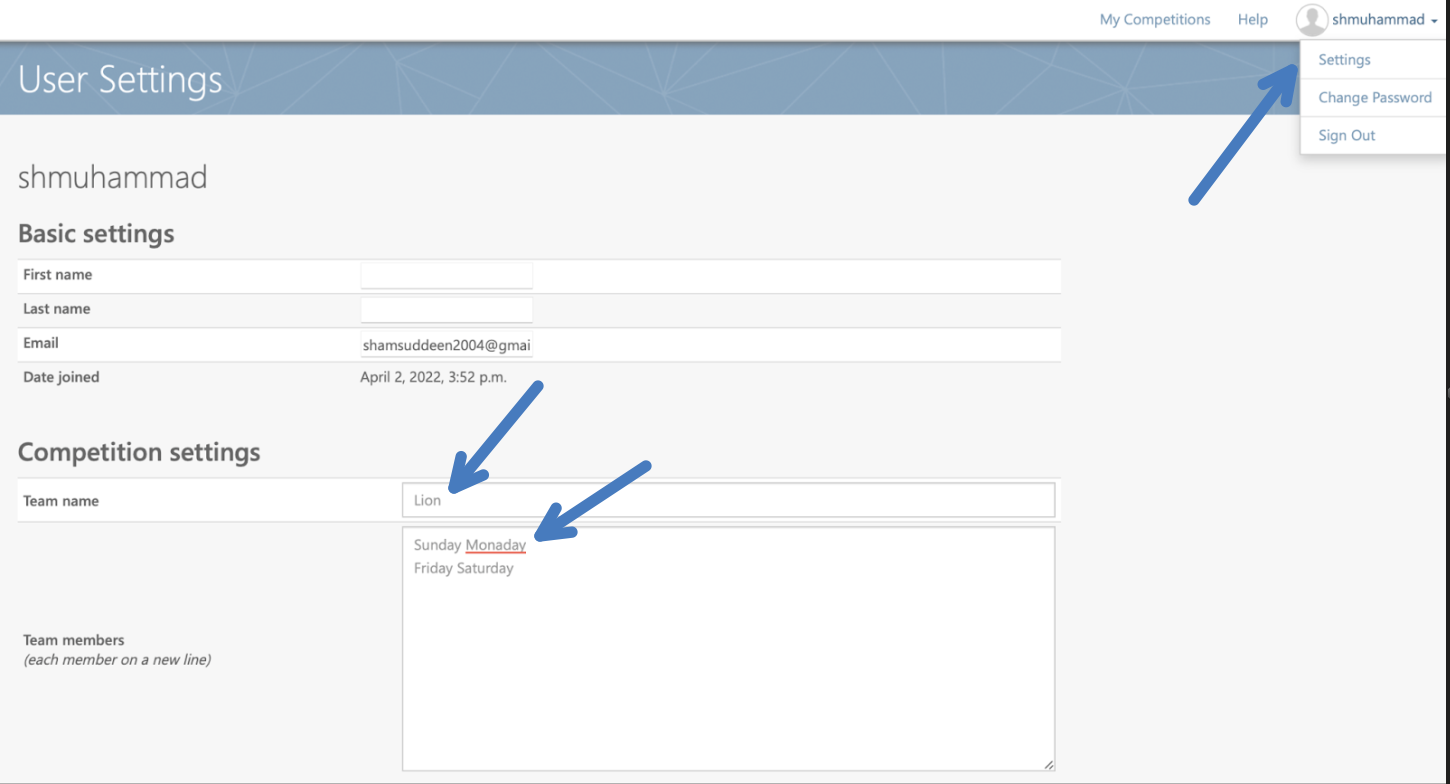

Update your profile: add a team name (e.g., Lion) and enter the names of the team members. To do so, go to "Competition Settings" under Settings. The screenshot above shows this page for the user "shmuhammad".

-

Read the information about the task on all the competition pages (Evaluation, Terms and Conditions, Submission format, Schedule, and Prizes).

-

Competition phases: The competition has two phases, the development phase (Phase 1) and the test phase (Phase 2).

-

The development phase (Phase 1): In this phase, the training data (with gold labels) and the development data (without gold labels) are released. Participants can train their models on the training set and evaluate them on the development set. Using additional external data to train a model is allowed (unconstrained). However, if you use any external data, make sure it is publicly available, or release it immediately after submitting your system description paper.
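Because the development data is released without gold labels, it cannot be scored locally. One common workaround is to hold out part of the training set and compute macro-averaged F1 (the unweighted mean of per-class F1), which is one of the scores the results page reports. The sketch below is our own illustration using only the standard library; the function names `stratified_split` and `macro_f1` are hypothetical, and the official CodaLab scorer may treat edge cases (for example, classes that are never predicted) differently.

```python
import random
from collections import defaultdict


def stratified_split(labels, holdout_frac=0.1, seed=0):
    """Split example indices so each class keeps roughly the same proportion.

    Returns (train_idx, holdout_idx). About `holdout_frac` of each class is
    held out; very small classes may end up with no held-out examples.
    """
    by_label = defaultdict(list)
    for i, y in enumerate(labels):
        by_label[y].append(i)
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train_idx, holdout_idx = [], []
    for idxs in by_label.values():
        rng.shuffle(idxs)
        n_hold = round(len(idxs) * holdout_frac)
        holdout_idx.extend(idxs[:n_hold])
        train_idx.extend(idxs[n_hold:])
    return sorted(train_idx), sorted(holdout_idx)


def macro_f1(gold, pred, labels=None):
    """Unweighted mean of per-class F1 scores.

    By default the classes are those seen in either `gold` or `pred`;
    a class with no true positives scores 0.
    """
    if labels is None:
        labels = sorted(set(gold) | set(pred))
    f1s = []
    for c in labels:
        tp = sum(1 for g, p in zip(gold, pred) if g == c and p == c)
        fp = sum(1 for g, p in zip(gold, pred) if g != c and p == c)
        fn = sum(1 for g, p in zip(gold, pred) if g == c and p != c)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        f1s.append(f1)
    return sum(f1s) / len(f1s)
```

For example, with gold labels `["positive", "negative", "neutral", "positive"]` and predictions `["positive", "neutral", "neutral", "negative"]`, `macro_f1` returns 4/9 (about 0.444): positive and neutral each score 2/3, and negative scores 0. Macro-averaging weights every class equally, so a model that ignores a minority class is penalized even if its accuracy looks good.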

-

The test phase (Phase 2): In this phase, the test data (without gold labels) will be released, and participants will evaluate their final models on it. The test data will be released on January 20, 2023, and the evaluation ends on January 31, 2023.

-

Decide which sub-task you want to participate in. You may choose one, several, or all of them (sub-task A, sub-task B, and sub-task C). Within each sub-task, you may participate in one or more tracks. Note: To win a particular sub-task, you must participate in all of its tracks.

-

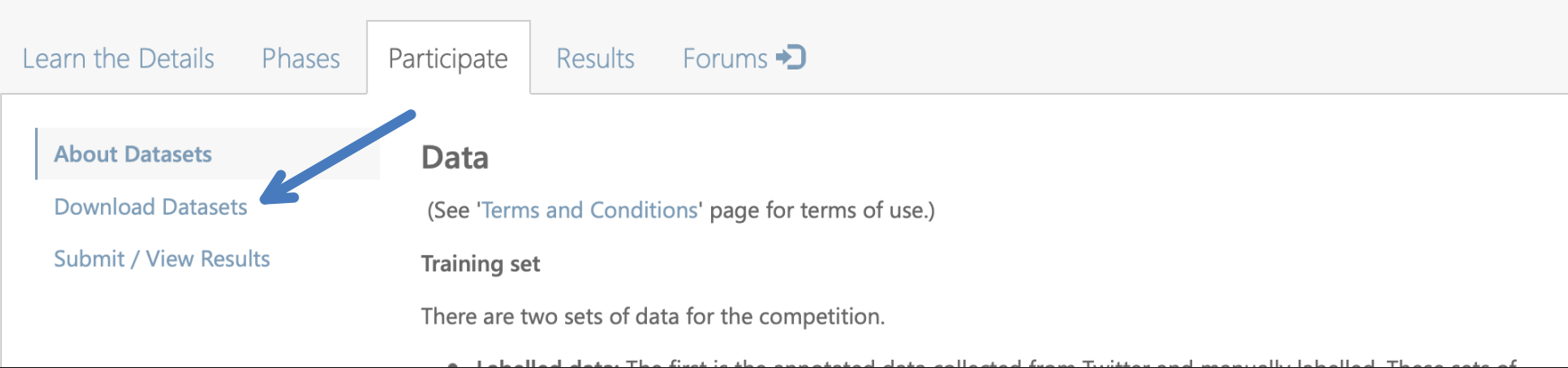

Download the competition data: The competition dataset contains all the data for the competition: training, development, and test (once released). To download the dataset, go to the “Participate” tab and click “Download Datasets”, as shown above. The data is also available on the competition GitHub page.
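Once the files are downloaded, a quick way to inspect them is to parse them as tab-separated text. The sketch below assumes each file has a header row with a text column named `tweet` and a label column named `label`; these column names are assumptions, so check them against the header of the files you actually download. `parse_tsv` and `load_tsv` are hypothetical helpers, not part of any provided package.

```python
import csv


def parse_tsv(lines, text_col="tweet", label_col="label"):
    """Parse tab-separated lines (header row first) into (texts, labels).

    The column names "tweet" and "label" are assumptions. If the label
    column is missing (e.g. a file released without gold labels),
    labels is returned as None.
    """
    # QUOTE_NONE keeps quote characters inside tweets as literal text.
    reader = csv.DictReader(lines, delimiter="\t", quoting=csv.QUOTE_NONE)
    has_labels = label_col in (reader.fieldnames or [])
    texts, labels = [], []
    for row in reader:
        texts.append(row[text_col])
        if has_labels:
            labels.append(row[label_col])
    return texts, (labels if has_labels else None)


def load_tsv(path, **kwargs):
    """Open a UTF-8 TSV file and parse it with parse_tsv."""
    with open(path, encoding="utf-8", newline="") as f:
        return parse_tsv(f, **kwargs)
```

For example, `texts, labels = load_tsv("ha_train.tsv")` (a hypothetical file name for the Hausa training split) followed by `collections.Counter(labels)` shows the label distribution, which is worth checking before training because class imbalance affects Macro-F1.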

-

Before running your own experiments, walk through the Google Colab notebook to create a baseline experiment and generate a submission file (the next step explains how to make a submission). You can find more about the Colab notebook on our GitHub page.
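The Colab notebook is the reference baseline. If you also want a quick sanity check that needs no third-party packages, a bag-of-words classifier is often enough to confirm that your data loading and submission pipeline work end to end. The sketch below is a multinomial Naive Bayes classifier with add-one smoothing; it is our own illustration, not the model used in the notebook, and the lowercase whitespace tokenizer is a deliberately crude assumption.

```python
import math
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase whitespace tokenization (a crude, illustrative choice)."""
    return text.lower().split()


class NaiveBayes:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.class_counts = Counter(labels)  # documents per class (for priors)
        self.word_counts = defaultdict(Counter)  # class -> token counts
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        self.total_words = {c: sum(cnt.values()) for c, cnt in self.word_counts.items()}
        return self

    def predict_one(self, text):
        n_docs = sum(self.class_counts.values())
        v = len(self.vocab)
        best_label, best_score = None, -math.inf
        for c, n_c in self.class_counts.items():
            score = math.log(n_c / n_docs)  # log prior
            for w in tokenize(text):
                if w not in self.vocab:
                    continue  # skip tokens never seen in training
                # Smoothed log likelihood of the token given the class.
                score += math.log((self.word_counts[c][w] + 1) / (self.total_words[c] + v))
            if score > best_score:
                best_label, best_score = c, score
        return best_label

    def predict(self, texts):
        return [self.predict_one(t) for t in texts]
```

Fit it on the training texts and labels with `NaiveBayes().fit(texts, labels)`, then call `predict` on the development texts to get one label per example. Working in log space avoids numerical underflow when multiplying many small probabilities.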

-

Submit your baseline experiment results (optional)

- Read the "Submission format" tab to see the submission file format.

- For example, to submit for Hausa (ha), your submission file should be named pred_ha.tsv and zipped (the zip file itself can have any name).

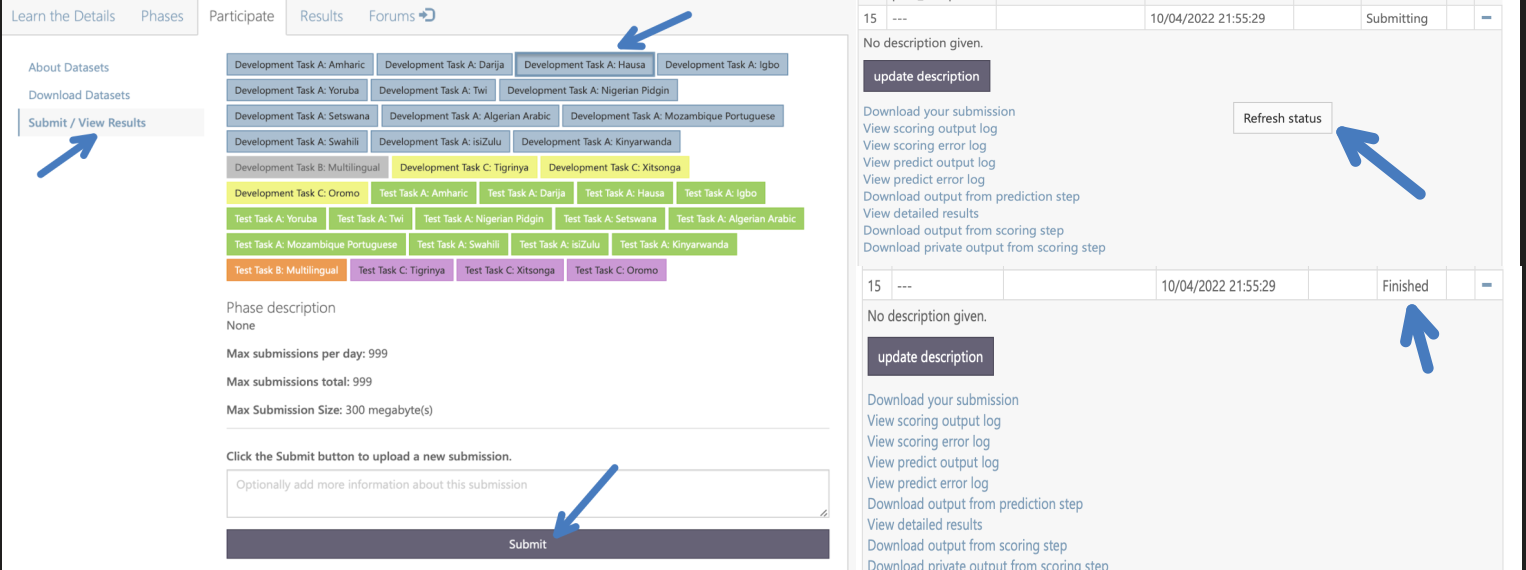

- Go to the "Participate" tab, click "Submit/View Result", and select "Development Task A: Hausa". Click the "Submit" button, select the zipped file, and wait a few moments for the submission to run. Click the refresh button to check its status.

- If the submission is successful, its status will show "Finished". If it fails, check the error log, fix the format issue (if any), and resubmit the updated zip file.

- Click "View detailed results" to see your system score (Precision, Recall and Macro-F1).

- If you still have issues, contact the task organizers.
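Many submission errors come from a malformed file, so it can help to validate predictions before zipping them. The sketch below writes `pred_<lang>.tsv` (for example `pred_ha.tsv`) and zips it. The header `ID`/`label` and the label set `positive`/`negative`/`neutral` are assumptions; confirm both on the "Submission format" tab. `write_submission` is a hypothetical helper. The file is stored at the top level of the archive, since CodaLab scoring programs usually expect that rather than a nested folder.

```python
import csv
import os
import zipfile

# Assumed label set; confirm it on the "Submission format" tab.
ALLOWED_LABELS = {"positive", "negative", "neutral"}


def write_submission(ids, preds, lang, out_dir=".", zip_name="submission.zip"):
    """Validate predictions, write pred_<lang>.tsv, zip it, and return the zip path."""
    if len(ids) != len(preds):
        raise ValueError(f"{len(ids)} ids but {len(preds)} predictions")
    bad = set(preds) - ALLOWED_LABELS
    if bad:
        raise ValueError(f"unexpected labels: {sorted(bad)}")
    os.makedirs(out_dir, exist_ok=True)
    tsv_path = os.path.join(out_dir, f"pred_{lang}.tsv")
    with open(tsv_path, "w", encoding="utf-8", newline="") as f:
        writer = csv.writer(f, delimiter="\t", lineterminator="\n")
        writer.writerow(["ID", "label"])  # assumed header
        writer.writerows(zip(ids, preds))
    zip_path = os.path.join(out_dir, zip_name)
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        # arcname drops the directory so the TSV sits at the archive root.
        zf.write(tsv_path, arcname=os.path.basename(tsv_path))
    return zip_path
```

For Hausa, `write_submission(dev_ids, dev_preds, "ha")` produces `submission.zip` containing `pred_ha.tsv`, which you can then upload as described in the steps above. Raising an error on unknown labels or mismatched lengths catches problems locally instead of waiting for the CodaLab error log.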

-

Make a submission on the dev set (Phase 1 or Development Phase)

- This phase has already started.

- The procedure is similar to the previous step (submission of baseline prediction).

- You can make multiple submissions in this phase (as many as 999 submissions).

-

Make a submission on the test set (Phase 2 or Evaluation phase)

- This phase (evaluation period) starts on 10th January, 2023.

- The test set (without gold labels) will be released.

- The procedure is similar to that for the dev set, with the following differences:

- The leaderboard will be disabled, and you will not be able to see the results of your submissions on the test set until the end of the evaluation period.

- You can still see if your submission was successful or resulted in some error.

- In case of error, you can view the error log.

- Each team is allowed at most 3 submissions. Only your final valid submission will count as your official submission to the competition.

- Once the competition is over, we will release the gold labels, and you will be able to compute results for the various system variants you may have developed. We encourage you to report results for all of your systems (or system variants) in the system description paper; however, please clearly indicate the result of your official submission.

- We will make the final submissions of the teams public at some point after the evaluation period.

- After the evaluation phase, the best teams in each sub-task will win a prize. To be eligible for a prize, winners are expected to share the approach they used in the competition. Check "AfriSenti Prize" for more information.

- All teams that submitted results on the test data are encouraged to submit a system description paper describing their system. Sebastian Ruder and Nedjma Djouhra will run a mentorship session on how to write a system description paper (the date will be announced).

- Task participants will be assigned other teams' system description papers to review on OpenReview, so every paper will be peer-reviewed.

- Attend the SemEval-2023 workshop (the location will be announced). If you are a student and have submitted a system description paper, you can apply for the Google Conference Travel Award, which provides up to $3,000 in travel support. We will guide you through the application process.

Walkthrough on How To Participate

We have prepared and recorded walkthrough videos explaining the competition, how to run the Colab baseline experiments, and how to submit to CodaLab.

- How to participate in the shared task.

- How to run the baseline experiment using the Colab notebook we provided.

- How to make a submission using the result from the Colab baseline experiment.

Some resources for beginners in sentiment analysis

- Sentiment Analysis with BERT and Transformers by Hugging Face using PyTorch and Python (includes YouTube videos)

- Tutorials on getting started with PyTorch and TorchText for sentiment analysis

- Sentiment Analysis on Tweets

- Awesome sentiment analysis

- Reading list of awesome sentiment analysis papers

- Progress in sentiment analysis (papers with code)

- SOTA in sentiment analysis (nlp-progress)